GitHub Trending Repos: Top Open Source Radar (2025-11-17)

By devkit.best on 2025-11-17

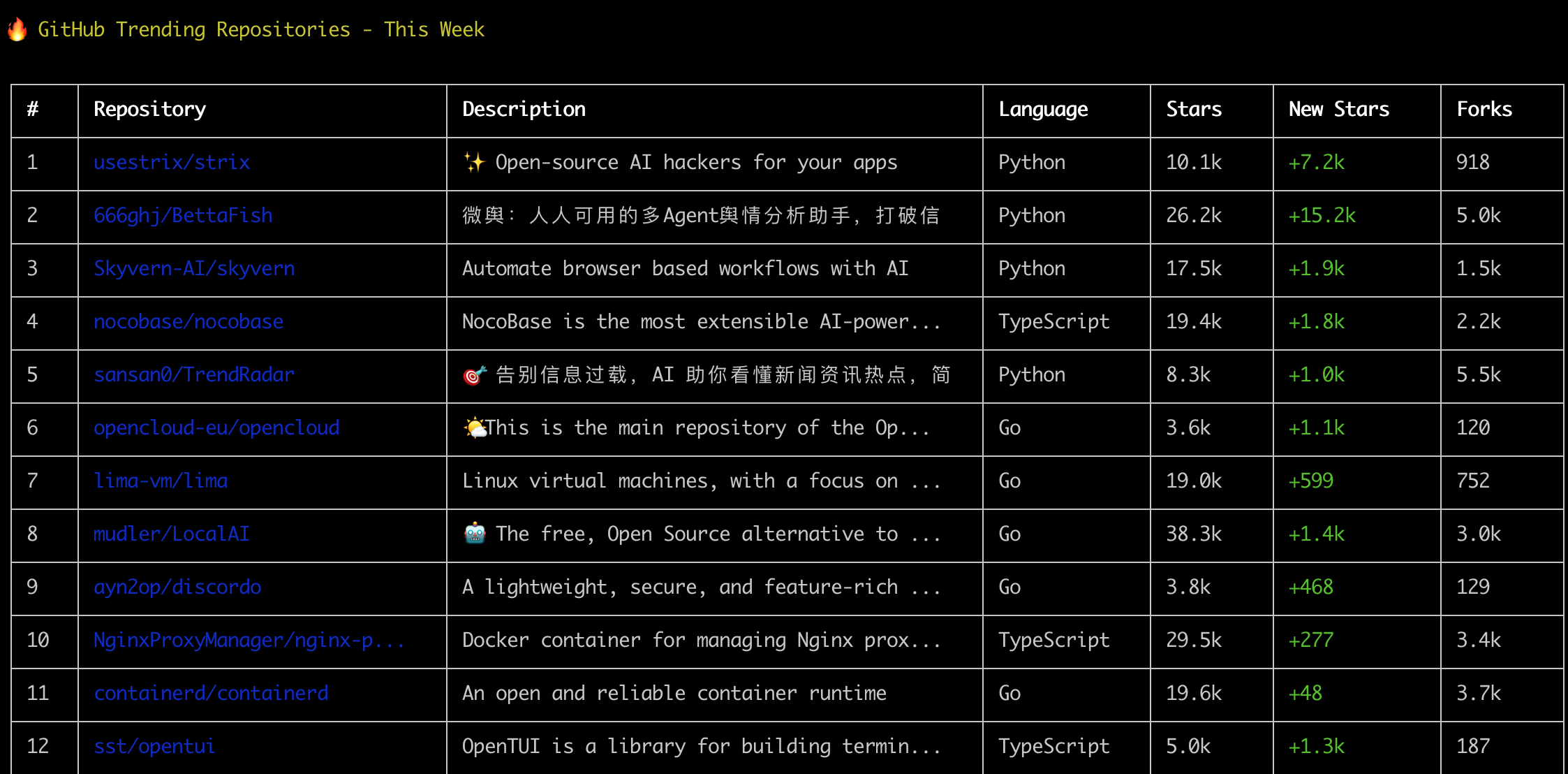

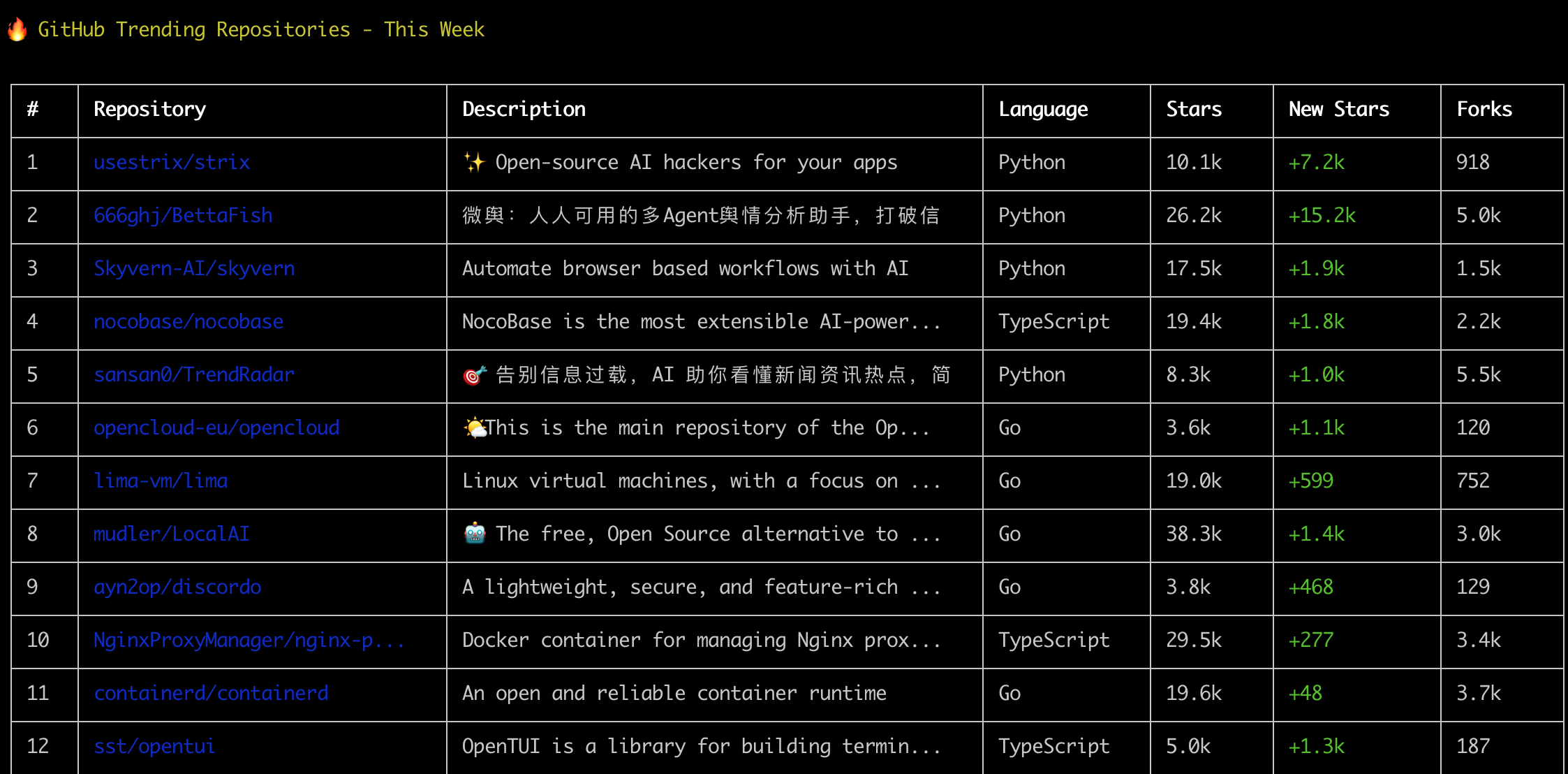

This Week’s GitHub Trending Repositories (2025-11-17)

Developers are flooded with new open source projects every week—and it’s hard to know what deserves attention. This curated look at the GitHub Trending Repositories for 2025-11-17 pinpoints what matters now: AI agents for security, browser automation, no/low-code platforms, self-hosted LLMs, container tooling, and lightweight TUIs. Whether you’re a developer shipping features, a technical decision-maker evaluating bets, or a maintainer scanning ecosystem shifts, this report distills signal from noise.

What you’ll get:

- A clear overview of weekly themes across open source projects

- Practical feature breakdowns, best-fit use cases, and quick starts

- A comparison table across 11 dimensions to speed decision-making

- Guidance to select the right developer tools for your stack

If you want more vetted tools, explore our AI and DevOps collections on DevKit.best for deeper evaluations and roadmaps:

- Curated AI development tools: https://devkit.best/category/ai

- DevOps and platform engineering picks: https://devkit.best/category/devops

- Self-hosted architectures and guides: https://devkit.best/blog/self-hosted-ai-guide

Want a personalized shortlist? Visit https://devkit.best/ to get curated recommendations aligned to your use case.

Weekly Trends Overview: What’s Driving the Spike

Several themes unify this week’s GitHub Trending Repositories:

- Security + AI agents: strix automates penetration testing—an emerging pattern where AI agents operationalize infosec workflows.

- Multi-agent OSINT and trend analysis: BettaFish and TrendRadar focus on information synthesis, sentiment, and forecasting to counter information overload.

- Browser automation with AI: skyvern helps teams automate brittle web workflows without writing dozens of selectors and scripts.

- No/low-code platforms with AI: nocobase shows momentum in enterprise app scaffolding with an extensible plugin system.

- Self-hosted AI and inference: LocalAI and ktransformers push local-first LLM inference without proprietary dependencies.

- Solid DevOps foundations: containerd, nginx-proxy-manager, alertmanager, lima—reliability, observability, and simpler networking for modern infra.

- TUI renaissance: opentui and discordo highlight a growing trend—efficient, scriptable, terminal-first experiences for developers.

This week’s trending projects suit teams that are modernizing pipelines, hardening security, or moving to self-hosted AI stacks.

Repository-by-Repository Analysis

Note: Star counts reflect the snapshot provided in this week’s list. Always review each repo’s README, issues, and release notes for the latest details.

1) strix (Python) — AI Agents for Penetration Testing

- Feature Overview: Open-source AI agents for pentesting tasks across recon, exploitation, and reporting.

- Core Features:

- Automated reconnaissance and vulnerability triage

- Agentic workflows to chain tasks

- Extensible modules for new targets or methods

- Python ecosystem and CLI-friendly

- Use Cases:

- Security engineers augmenting red-team workflows

- DevSecOps teams validating CI/CD and staging environments

- Educators demonstrating practical AI security automation

- Technical Highlights:

- Agent patterns reduce manual toil in enumeration and validation

- Modular architecture invites contributions

- Quick Start Guide:

- git clone https://github.com/usestrix/strix

- cd strix

- Review README for environment setup, API keys if required, and sample runs

- External repo: https://github.com/usestrix/strix
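
Because agentic pentesting sends real traffic to real hosts, it can help to gate every run on an explicit scope list. The sketch below is not part of strix; the allowlisted networks and the function names `in_scope` and `filter_targets` are hypothetical, and it only illustrates the idea of rejecting anything outside an authorized range before an agent starts.

```python
import ipaddress

# Hypothetical allowlist: replace with the networks you are authorized to test.
ALLOWED_NETWORKS = [
    ipaddress.ip_network("10.20.0.0/16"),
    ipaddress.ip_network("192.168.56.0/24"),
]


def in_scope(target_ip, allowed=ALLOWED_NETWORKS):
    """Return True only if target_ip parses as an IP inside an allowed network."""
    try:
        addr = ipaddress.ip_address(target_ip)
    except ValueError:
        # Hostnames and malformed input are rejected rather than resolved.
        return False
    return any(addr in net for net in allowed)


def filter_targets(targets):
    """Split candidate targets into (approved, rejected), preserving order."""
    approved = [t for t in targets if in_scope(t)]
    rejected = [t for t in targets if not in_scope(t)]
    return approved, rejected
```

A CI job could call `filter_targets` first and fail the pipeline when the rejected list is non-empty, so that out-of-scope targets never reach the agent.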

2) BettaFish (Python) — Multi-Agent Public Opinion Analysis

- Feature Overview: “微舆” multi-agent assistant for public opinion analysis; aims to break echo chambers and forecast trends.

- Core Features:

- Multi-platform news and social data insight

- Forecasting and decision-support emphasis

- No external framework dependency; from-scratch implementation

- Use Cases:

- Policy teams, research analysts, comms professionals

- Enterprises monitoring brand sentiment and risks

- Technical Highlights:

- Multi-agent architecture for synthesis and reasoning

- Designed to work end-to-end with minimal assumptions

- Quick Start Guide:

- git clone the repository

- cd BettaFish

- Follow README for data sources, config, and first analysis run

3) skyvern (Python) — AI Browser Workflow Automation

- Feature Overview: Automate web workflows (logins, form fills, scraping) using AI rather than hand-coded selectors.

- Core Features:

- Declarative workflow authoring

- State-aware browser actions and retries

- Observability into runs and errors

- Use Cases:

- Ops teams automating repetitive web tasks

- QA for scenario coverage where UI changes frequently

- Internal tools needing web integrations without custom code

- Technical Highlights:

- AI-driven action planning to reduce brittle scripts

- Python integration makes it CI/CD-friendly

- Quick Start Guide:

- Clone repo

- Set up Python environment per README

- Run sample workflows and iterate

4) nocobase (TypeScript) — Extensible AI-Powered No/Low-Code Platform

- Feature Overview: Build enterprise apps with a plugin ecosystem and AI-assisted creation flows.

- Core Features:

- Plugin architecture and schema-driven app building

- Data modeling, workflows, and role-based access

- AI helpers for scaffolding and automation

- Use Cases:

- Internal tools, CRMs, back-office systems

- Teams that need quick iteration with guardrails

- Technical Highlights:

- TypeScript stack and modularity for customization

- Extensibility aligns with enterprise needs

- Quick Start Guide:

- Clone repo

- Start with Docker or Node per README

- Install core plugins and build your first model

- External repo: https://github.com/nocobase/nocobase

5) TrendRadar (Python) — AI-Powered Trend Monitoring and Analysis

- Feature Overview: Aggregates hotspots across 35+ platforms (e.g., TikTok, Zhihu, Bilibili) with AI filtering and analysis.

- Core Features:

- Intelligent filtering, auto-push to multiple channels

- MCP-based analysis tools: trend tracking, sentiment, similarity search

- Quick web deploy; Docker support; mobile notifications

- Use Cases:

- Media teams, investment researchers, risk monitoring desks

- Companies needing proactive alerting and summarization

- Technical Highlights:

- Multi-channel notification integration

- Designed for low-friction deployment

- Quick Start Guide:

- Clone repo

- Use Docker Compose per README

- Configure sources and push channels

6) opencloud (Go) — Backend for the OpenCloud Server

- Feature Overview: Golang backend services for OpenCloud—focus on core server logic.

- Core Features:

- Modular service components

- Cloud-oriented architecture primitives

- Strongly typed Go codebase for reliability

- Use Cases:

- Platform teams building internal cloud services

- Contributors exploring cloud backend patterns

- Technical Highlights:

- Go microservices patterns and interfaces

- Emphasis on maintainability

- Quick Start Guide:

- Clone repo

- Review services and Makefile tasks

- Follow README to run locally with Go toolchain

7) lima (Go) — Linux VMs Focused on Container Workloads

- Feature Overview: Provision Linux virtual machines optimized for containers on macOS and beyond.

- Core Features:

- Lightweight VM orchestration

- Container runtime support and host integration

- YAML-based profiles for repeatable environments

- Use Cases:

- Developers on macOS running Linux container tooling through a local VM

- Teams standardizing dev environments across laptops

- Technical Highlights:

- Lean, reproducible VM lifecycle

- Integrates with Docker and containerd ecosystems

- Quick Start Guide:

- Install lima

- Create a YAML instance config from examples

- Start VM and run containers inside

8) LocalAI (Go) — Self-Hosted OpenAI/Claude Alternative

- Feature Overview: Local-first inference server acting as a drop-in OpenAI-compatible API—no GPU required.

- Core Features:

- Text, audio, image, video generation; voice cloning

- Supports gguf, transformers, diffusers, distributed and P2P inference

- Runs on consumer-grade hardware

- Use Cases:

- Privacy-sensitive orgs deploying on-prem LLMs

- Prototypers building AI features without vendor lock-in

- Technical Highlights:

- OpenAI-compatible API surface simplifies integration

- Efficient inference pipelines for CPU-first setups

- Quick Start Guide:

- Clone repo

- Start via Docker or binary following README

- Point your existing OpenAI client to LocalAI

- External repo: https://github.com/mudler/LocalAI
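
Pointing an existing client at LocalAI mostly comes down to changing the base URL. The sketch below builds an OpenAI-style chat completion request using only the standard library. The address `http://localhost:8080/v1` and the model name `my-local-model` are assumptions for illustration; check the README for the port and model names in your deployment.

```python
import json
import urllib.request

# Assumptions: a LocalAI server on localhost:8080 and a model called "my-local-model".
BASE_URL = "http://localhost:8080/v1"
MODEL = "my-local-model"


def build_chat_request(prompt, base_url=BASE_URL, model=MODEL):
    """Build (but do not send) an OpenAI-style chat completion request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request):
    """Send the request and return the first choice's text (needs a running server)."""
    with urllib.request.urlopen(request, timeout=60) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]
```

With a server running, `send(build_chat_request("Say hello"))` would return the model's reply. The same base-URL swap applies to official OpenAI client libraries that accept a custom endpoint.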

9) discordo (Go) — Discord Terminal Client (TUI)

- Feature Overview: Lightweight, secure Discord TUI for keyboard-driven power users.

- Core Features:

- Minimal resource footprint

- Secure auth handling

- Rich TUI with channels, DMs, and commands

- Use Cases:

- Terminal-centric users and remote environments

- Automation or scripting for community moderators

- Technical Highlights:

- Go + TUI libs for performance and portability

- Security-first posture for credentials

- Quick Start Guide:

- Clone repo

- Build with Go per README

- Configure token securely and launch

10) nginx-proxy-manager (TypeScript) — GUI for Nginx Reverse Proxy

- Feature Overview: Dockerized UI to manage Nginx proxy hosts with SSL and access control.

- Core Features:

- Simple UI for hosts, SSL certs, redirections

- Docker-first deployment

- Multi-domain, multi-service routing

- Use Cases:

- Home labs to SMEs needing clean ingress management

- Teams standardizing reverse proxy setups

- Technical Highlights:

- TypeScript UI and modern UX

- Streamlines Let’s Encrypt and Nginx config complexity

- Quick Start Guide:

- Use Docker Compose from README

- Access web UI and configure first host

- Add SSL and routing rules

11) containerd (Go) — Production-Grade Container Runtime

- Feature Overview: A reliable, open container runtime widely used in cloud-native stacks.

- Core Features:

- OCI-compliant runtime and image management

- CRI integration for Kubernetes

- Mature ecosystem and stability

- Use Cases:

- Kubernetes clusters, CI systems, edge deployments

- Any platform standardizing on OCI runtimes

- Technical Highlights:

- Graduated CNCF project with robust APIs

- Proven performance characteristics

- Quick Start Guide:

- Install via distro packages or binaries

- Configure per environment (CRI/K8s)

- Validate with basic image pull/run flows

12) opentui (TypeScript) — Library for Building Terminal UIs

- Feature Overview: Build modern TUIs in TypeScript for portable, scriptable apps.

- Core Features:

- Components for panels, lists, forms

- Event-driven patterns for interactivity

- Cross-platform terminal support

- Use Cases:

- CLI apps that need richer UX

- Internal tools for operations and support

- Technical Highlights:

- TypeScript type-safety and dev velocity

- Declarative API to speed layout work

- Quick Start Guide:

- Install via package manager

- Import components and render a simple TUI

- Iterate layout and add event handlers

13) alertmanager (Go) — Prometheus Alert Routing and Silencing

- Feature Overview: Central alert handling for Prometheus with routing, dedup, and silences.

- Core Features:

- Routing rules per severity/team/channel

- Silence windows and inhibition

- Integrations with email, chat, and webhooks

- Use Cases:

- SRE and NOC teams needing sane alert hygiene

- Platform monitoring across environments

- Technical Highlights:

- Proven at scale in cloud-native stacks

- Declarative config for repeatability

- Quick Start Guide:

- Deploy via Docker or binary

- Configure receivers and routes

- Test with sample Prometheus alerts
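
To test routes and receivers without waiting for a real incident, you can post a synthetic alert to Alertmanager's v2 HTTP API. The sketch below builds such a payload. The alert name, labels, and the local URL on port 9093 are assumptions for illustration; adjust them to match your routing rules and deployment.

```python
import json
import urllib.request
from datetime import datetime, timedelta, timezone

# Assumption: Alertmanager reachable locally on its usual port.
ALERTMANAGER_URL = "http://localhost:9093/api/v2/alerts"


def build_test_alert(name, severity, summary, minutes=5, now=None):
    """Return a one-element alert list in the shape the v2 alerts endpoint accepts."""
    now = now or datetime.now(timezone.utc)
    return [{
        "labels": {"alertname": name, "severity": severity},
        "annotations": {"summary": summary},
        "startsAt": now.isoformat(),
        # The alert resolves on its own after `minutes` if it is not re-sent.
        "endsAt": (now + timedelta(minutes=minutes)).isoformat(),
    }]


def post_alerts(alerts, url=ALERTMANAGER_URL):
    """POST the alerts as JSON and return the HTTP status (needs a running server)."""
    req = urllib.request.Request(
        url,
        data=json.dumps(alerts).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        return resp.status
```

Sending one alert per severity label is a quick way to confirm that each route reaches the receiver you expect.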

14) ktransformers (Python) — LLM Inference Optimization Framework

- Feature Overview: Flexible playground to experience state-of-the-art optimizations for LLM inference.

- Core Features:

- KV-cache strategies and batching improvements

- Hooks for experimentation with new kernels

- Educational examples for performance trade-offs

- Use Cases:

- Researchers and infra engineers optimizing inference

- Teams cutting latency on local or cloud GPUs

- Technical Highlights:

- Modular design to A/B different optimizations

- Focus on transparent, reproducible benchmarking

- Quick Start Guide:

- Clone repo

- Prepare Python env and models per README

- Run benchmark scripts and compare settings
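
When comparing settings, tail latency usually matters as much as the average. The helper below is a generic timing sketch, not part of ktransformers: `measure` times any zero-argument callable (for example, a hypothetical wrapper around one inference call) and `summarize` reports nearest-rank percentiles.

```python
import math
import time


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def measure(fn, runs=20):
    """Call fn() `runs` times and return per-call latencies in milliseconds."""
    latencies = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        latencies.append((time.perf_counter() - start) * 1000)
    return latencies


def summarize(latencies):
    """Reduce raw latencies to the figures most comparisons need."""
    return {
        "p50": percentile(latencies, 50),
        "p95": percentile(latencies, 95),
        "max": max(latencies),
    }
```

Running `summarize(measure(call_a))` and `summarize(measure(call_b))` on the same prompts and hardware gives a like-for-like comparison. Discarding the first few warm-up calls is a common refinement.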

15) go-sdk (Go) — MCP (Model Context Protocol) SDK

- Feature Overview: Official Go SDK for MCP servers and clients, maintained in collaboration with Google.

- Core Features:

- Server and client utilities for MCP

- Strong typing and interfaces for protocol implementations

- Examples to bootstrap projects

- Use Cases:

- Tool builders integrating MCP into agents

- Backend services exposing MCP capabilities

- Technical Highlights:

- Canonical SDK reduces integration drift

- Go ergonomics for reliability

- Quick Start Guide:

- go get SDK per README

- Initialize client/server scaffolds

- Implement handlers and run examples
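
It helps to know what travels over the wire before writing handlers. MCP messages are JSON-RPC 2.0, and a tool invocation uses the `tools/call` method. The sketch below shows that message shape in Python purely as an illustration; it is not the Go SDK's API, and the tool name `search_trends` and its arguments are hypothetical.

```python
import itertools
import json

# Each JSON-RPC request needs an id so the response can be matched to it.
_request_ids = itertools.count(1)


def tool_call_request(tool_name, arguments):
    """Build a JSON-RPC 2.0 request asking an MCP server to run a tool."""
    return {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }


if __name__ == "__main__":
    # Hypothetical tool and arguments, printed as the JSON a client would send.
    message = tool_call_request("search_trends", {"query": "self-hosted LLM"})
    print(json.dumps(message, indent=2))
```

An SDK wraps this framing, along with transport and capability negotiation, behind typed interfaces so that handlers only deal with the tool name and arguments.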

16) dbeaver (Java) — Universal Database and SQL Client

- Feature Overview: Cross-platform database tool supporting a wide range of engines.

- Core Features:

- Rich SQL editor, ER diagrams, data import/export

- Extensions for NoSQL and cloud databases

- Team-friendly features and themes

- Use Cases:

- Data engineers, DBAs, and developers

- Teams needing a unified DB client across engines

- Technical Highlights:

- Mature plugin ecosystem

- Broad driver support

- Quick Start Guide:

- Download installer or use package manager

- Connect to a database with provided drivers

- Explore schema and run queries

17) cognee (Python) — Memory for AI Agents in ~6 Lines

- Feature Overview: Lightweight memory layer to give agents recall and context.

- Core Features:

- Simple API for storing and retrieving context

- Pluggable backends

- Minimal boilerplate for agent frameworks

- Use Cases:

- Agent developers adding persistent memory

- Prototypers building context-aware assistants

- Technical Highlights:

- Emphasis on simplicity over heavy infra

- Plays well with existing Python AI stacks

- Quick Start Guide:

- Clone repo

- Install per README

- Add memory calls to your agent in a few lines

Comparison Table: Editorial Overview

Note: Activity, learning curve, community, and scores are editorial guidance based on project scope and ecosystem maturity. Verify fit with your context.

| Repository Name | Primary Purpose | Programming Language | Stars Count | Activity Level | Best Use Cases | Learning Curve | Community Support | Advantages (✅) | Limitations (❌) | Recommendation Score |
|---|---|---|---|---|---|---|---|---|---|---|
| strix | AI pentesting agents | Python | 11568 | High (Trending) | Security automation | High | Growing | Automates recon/exploit tasks | Needs security expertise | 8.7/10 |
| BettaFish | Multi-agent public opinion analysis | Python | 27561 | High (Trending) | Sentiment/trend analysis | Medium | Large | Forecasting + multi-source insight | Data source localization | 8.6/10 |
| skyvern | AI browser automation | Python | 18127 | High (Trending) | Web workflow automation | Medium | Growing | Less brittle than scripts | Complex UIs may need tuning | 8.4/10 |
| nocobase | AI-powered no/low-code platform | TypeScript | 19589 | High (Trending) | Internal apps/enterprise tools | Medium | Large | Extensible plugin system | Governance required at scale | 8.8/10 |
| TrendRadar | AI trend aggregation + alerts | Python | 15330 | High (Trending) | Monitoring, OSINT | Medium | Growing | Multi-channel push + MCP tools | Platform changes may break scrapes | 8.3/10 |
| opencloud | Backend services for OpenCloud | Go | 3830 | High (Trending) | Cloud backend dev | High | Growing | Clean Go services baseline | Early-stage integration work | 7.9/10 |
| lima | Linux VMs for containers | Go | 19113 | High (Trending) | Dev envs on macOS | Medium | Mature | Reproducible, lightweight VMs | VM networking edge cases | 8.5/10 |
| LocalAI | Self-hosted OpenAI-compatible | Go | 38597 | High (Trending) | On-prem LLM APIs | Medium-High | Large | CPU-friendly, broad model support | Model quality varies | 9.0/10 |
| discordo | Discord terminal client | Go | 3894 | High (Trending) | Terminal-first chat | Low-Medium | Niche/Growing | Fast, secure, minimal | Limited rich media | 7.8/10 |
| nginx-proxy-manager | GUI for Nginx | TypeScript | 29637 | High (Trending) | Reverse proxy UI | Low-Medium | Large | Easy SSL and routing | Advanced edge cases need Nginx | 8.6/10 |
| containerd | Container runtime | Go | 19651 | High (Trending) | K8s, CI, edge | High | Mature | Proven runtime foundation | Ops complexity for newcomers | 9.1/10 |
| opentui | Build TUIs | TypeScript | 5164 | High (Trending) | Rich CLI tools | Medium | Growing | DX of TS + TUI components | Terminal UX limits | 8.0/10 |
| alertmanager | Prometheus alerts | Go | 8076 | High (Trending) | SRE alert routing | Medium | Mature | Routing, silences, inhibition | Requires Prometheus ecosystem | 8.7/10 |
| ktransformers | LLM inference optimizations | Python | 15717 | High (Trending) | Perf research & ops | High | Growing | Transparent optimization tests | Hardware-specific tuning | 8.5/10 |
| go-sdk | MCP SDK | Go | 3011 | High (Trending) | MCP servers/clients | Medium | Growing | Canonical SDK, examples | Ecosystem still evolving | 8.2/10 |
| dbeaver | Universal DB client | Java | 46931 | High (Trending) | DB development | Low | Large | Wide driver support | Heavy for simple tasks | 9.0/10 |
| cognee | Agent memory in 6 lines | Python | 8645 | High (Trending) | Lightweight agent memory | Low-Medium | Growing | Minimal API, fast integration | Limited advanced features | 8.1/10 |

Use Cases and Best Practices

- Harden security with AI-driven pentesting

- Challenge: Manual recon and exploit testing doesn’t scale across microservices.

- Solution: Use strix to chain agentic tasks for recon, validation, and reporting.

- Best Practice: Run in a sandbox; log all interactions; integrate into CI with clear scoping.

- Expected Outcome: Faster detection of misconfigurations and repeatable security checks.

- Counter information overload for comms and risk teams

- Challenge: Tracking public sentiment across dozens of platforms is noisy and slow.

- Solution: Use TrendRadar for aggregation + alerts; layer BettaFish for deeper trend forecasting.

- Best Practice: Start with a handful of critical sources; iterate on filters; set push rules to chat or email.

- Expected Outcome: Timely signal with fewer false positives; sharper decision memos.

- Ship internal tools quickly with low-code + TUIs

- Challenge: Stakeholders need dashboards and workflows, but engineering bandwidth is tight.

- Solution: Use nocobase to scaffold data models and CRUD; pair with opentui for terminal ops tooling.

- Best Practice: Standardize plugins and RBAC; keep a style guide for TUI patterns.

- Expected Outcome: Turnaround for internal apps drops from weeks to days; consistent UX across teams.

- Self-host LLMs to reduce cost and preserve privacy

- Challenge: Vendor APIs are costly and sensitive data can’t leave the perimeter.

- Solution: Deploy LocalAI as a drop-in OpenAI-compatible endpoint; experiment with ktransformers for latency gains; add cognee for agent memory.

- Best Practice: Start CPU-only for POCs; measure latency and quality; gradually add model variants and memory.

- Expected Outcome: Vendor independence, controllable cost profile, and privacy by default.

- Solidify DevOps foundations for scale

- Challenge: Fragmented container tooling and noisy alerts slow incident response.

- Solution: Use containerd as the runtime, nginx-proxy-manager for ingress simplicity, alertmanager for routing and silences, and lima to standardize dev envs.

- Best Practice: Version-lock infra components; keep alert runbooks; model ingress in code.

- Expected Outcome: Faster, safer releases; fewer on-call pages; easier developer onboarding.

For more implementation guides, see:

- Practical DevOps stack picks: https://devkit.best/category/devops

- AI engineering toolkits: https://devkit.best/category/ai

- Self-hosted AI step-by-step: https://devkit.best/blog/self-hosted-ai-guide

How to Choose the Right Project for You

Use this decision framework to evaluate the GitHub Trending Repositories for 2025-11-17:

- Need security automation? Choose strix if you have pentesting expertise and want repeatable AI-driven checks.

- Automating web tasks? skyvern is ideal when UIs change frequently and selector maintenance becomes brittle.

- Internal apps fast? Pick nocobase for schema-first apps with plugin extensibility. Combine with nginx-proxy-manager for quick ingress.

- Self-host AI? Start with LocalAI for an OpenAI-compatible endpoint on CPUs; add ktransformers to tune inference; use cognee to give agents memory.

- Data/DB workflows? dbeaver is the universal client that fits most teams out of the box.

- Platform engineering? containerd and alertmanager are production-grade foundations. Use lima to harmonize developer environments.

- Terminal-first workflows? opentui and discordo provide ergonomic TUIs for power users and remote operations.

Evaluation checklist:

- Fitness to your scenario (problem-solution fit)

- Operational complexity (learning curve + run cost)

- Ecosystem maturity (docs, examples, issue velocity)

- Integration surface (APIs, SDKs, protocol compatibility)

- Governance and security posture (RBAC, auditability)
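
One way to apply this checklist consistently is a simple weighted score. The sketch below is only an illustration: the weights are hypothetical and should reflect your own priorities, and each criterion is rated 1 to 5 where higher is better (so a high operational complexity rating means the project is easy to run).

```python
# Hypothetical weights for the five checklist items; they sum to 1.0.
WEIGHTS = {
    "fitness": 0.30,
    "operational_complexity": 0.20,
    "ecosystem_maturity": 0.20,
    "integration_surface": 0.15,
    "governance_security": 0.15,
}


def weighted_score(ratings):
    """Combine 1-5 ratings into one score; every criterion must be rated."""
    missing = set(WEIGHTS) - set(ratings)
    if missing:
        raise ValueError(f"missing ratings: {sorted(missing)}")
    return round(sum(WEIGHTS[k] * ratings[k] for k in WEIGHTS), 2)


def rank(candidates):
    """Return candidate names ordered from highest to lowest weighted score."""
    return sorted(candidates, key=lambda name: weighted_score(candidates[name]), reverse=True)
```

Rating two or three shortlisted projects this way keeps the comparison explicit and makes it easy to revisit the weights when priorities change.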

FAQ

Q1: What are GitHub Trending Repositories, and why do they matter this week?

A: GitHub Trending Repositories highlight projects gaining attention right now. For 2025-11-17, we see strong momentum in AI agents, self-hosted AI, and DevOps tooling. Use this snapshot to shortlist options, then validate fit with your stack. For deeper curation by category, browse our AI and DevOps hubs at https://devkit.best/category/ai and https://devkit.best/category/devops.

Q2: Which projects are best for enterprises starting with low-code and AI?

A: nocobase stands out for extensible enterprise apps and plugin-based governance. Pair it with alertmanager and nginx-proxy-manager for operational guardrails. If you’re exploring self-hosted AI APIs, LocalAI offers a low-friction entry point. See our self-hosted AI guide: https://devkit.best/blog/self-hosted-ai-guide.

Q3: Are these repositories production-ready?

A: Maturity varies. containerd, alertmanager, dbeaver, and nginx-proxy-manager are widely used and well-understood. Projects like strix, BettaFish, skyvern, and opencloud may be earlier-stage or domain-specific—great for pilots with clear boundaries. Always review docs, issues, and release cadence.

Q4: How can I evaluate community health and long-term viability?

A: Check documentation quality, recent commits, issue responsiveness, and contributor diversity. Prefer projects with clear roadmaps, tests, and release notes. Our category pages aggregate projects with a bias for clarity and maintainability: https://devkit.best/category/open-source and https://devkit.best/category/devops.

Q5: What’s the fastest path to a self-hosted AI stack this week?

A: Start with LocalAI for an OpenAI-compatible endpoint, add ktransformers to experiment with inference optimizations, and use cognee to give agents memory. Keep your first deployment simple (CPU-only), profile performance, then iterate. For step-by-step help: https://devkit.best/blog/self-hosted-ai-guide.

Final Thoughts and Next Steps

This week’s GitHub Trending Repositories surface a clear trajectory: practical AI (security, automation, self-hosted inference) layered onto proven DevOps foundations. The best results come from combining a few high-fit components rather than adopting everything at once.

- Start with a crisp problem statement and a 2–4 week pilot.

- Pick one project per layer (e.g., LocalAI for inference, nocobase for apps, alertmanager for observability).

- Measure outcomes (latency, cost, incident rate, time-to-value), then scale.

Ready to choose with confidence? Explore curated tool stacks and actionable playbooks at https://devkit.best/ and get a head start on your roadmap.

External references (authoritative GitHub repositories):

- strix: https://github.com/usestrix/strix

- nocobase: https://github.com/nocobase/nocobase

- LocalAI: https://github.com/mudler/LocalAI